Real-World World-building: UCR Develops Versatile AI-Driven Robots for Heavy Industries Built on the NVIDIA Isaac Platform

UCR (Under Control Robotics) is developing rugged, multipurpose humanoid robots for tasks in heavy industries like construction, energy, and mining. UCR's robots aim to improve efficiency and worker safety by reducing workers' exposure to hazardous environments, assisting with physically demanding work, and automating repetitive tasks. In just under eight months, UCR built and deployed a system we call Moby—a robust robotic platform capable of carrying a 60-pound (27 kg) payload and navigating steep inclines and outdoor environments, all developed by four engineers in an 80 m² lab.

Moby walks on outdoor grassland

Building on Moby’s ability to move freely through the world, UCR is developing its next-generation robot, which adds manipulation capability and will be unveiled next month. Because they robustly handle a diverse set of environments, these robots are well suited for applications like data acquisition, material handling, and other tasks in high-risk settings. UCR built a resilient body that houses a multipurpose AI “brain,” with vertically integrated hardware and software, so that its robots can thrive in the most challenging industrial environments. The ongoing rapid development of Moby is made possible by NVIDIA's Three Computer Solution.

Bridging the gap between simulation and hardware with NVIDIA GPU-enabled Isaac Lab

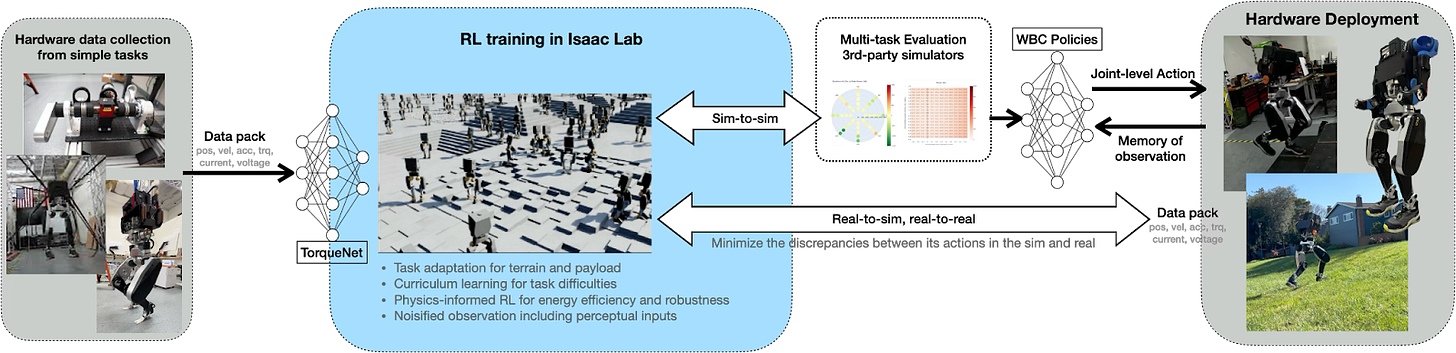

Deploying machine-learning models on physical hardware reveals multiple layers of discrepancy among the simulated robot, the simulated world, and reality. These discrepancies can arise from variations in actuators, sensors, and simulation environments, as well as from inherent model inaccuracies. UCR addressed these challenges with a proprietary training pipeline built atop NVIDIA Isaac GR00T’s synthetic data generation pipelines for motion and manipulation, NVIDIA Isaac Lab, and NVIDIA Isaac Sim [1]. Through an iterative process that evaluates and mitigates differences in model performance across variations in hardware and simulation environments, UCR has achieved near zero-shot sim-to-real transfer, with a 95% deployment success rate.

UCR’s in-house training pipeline

Real2sim. In simulation, the complexity of the physics model is traded off against computational efficiency, that is, the time it takes to execute a simulation step. Actuator dynamics are typically oversimplified, which produces significant discrepancies that hamper sim-to-real transfer. UCR trained a network to embed real-world actuator dynamics into the high-fidelity NVIDIA Isaac Sim environment [2]. The network learns the actuators' performance characteristics from data collected on actuators mounted on dynamometers and on robots performing fixtured stretching (without contact) and plyometric routines.
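To make the idea of a learned actuator model concrete, here is a minimal sketch in plain Python. It is not UCR's network: the class name `ActuatorNet`, the input choice (a short history of joint position errors and velocities, in the spirit of [2]), the layer sizes, and the random placeholder weights are all assumptions for illustration. A real model would be fitted to logged hardware data and then queried inside the simulator at every step.

```python
import random
from collections import deque


def softsign(x):
    """Smooth, bounded activation: x / (1 + |x|)."""
    return x / (1.0 + abs(x))


class ActuatorNet:
    """Hypothetical tiny MLP: history of (position error, velocity) -> torque."""

    def __init__(self, history_len=3, hidden=8, seed=0):
        rng = random.Random(seed)
        n_in = 2 * history_len
        # Placeholder weights; in practice these are trained on hardware logs.
        self.w1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)]
                   for _ in range(hidden)]
        self.b1 = [0.0] * hidden
        self.w2 = [rng.uniform(-0.5, 0.5) for _ in range(hidden)]
        self.b2 = 0.0
        # Fixed-length window of the most recent joint states.
        self.history = deque([(0.0, 0.0)] * history_len, maxlen=history_len)

    def step(self, pos_error, velocity):
        """Record the newest joint state and return a torque estimate."""
        self.history.append((pos_error, velocity))
        x = [value for pair in self.history for value in pair]
        hidden = [softsign(sum(w * xi for w, xi in zip(row, x)) + b)
                  for row, b in zip(self.w1, self.b1)]
        return sum(w * h for w, h in zip(self.w2, hidden)) + self.b2


net = ActuatorNet()
torque = net.step(pos_error=0.1, velocity=-0.5)
```

Feeding a history rather than a single sample is what would let such a model capture delays and other dynamics that an idealized motor model leaves out.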

Sim2sim. Different simulators can implement the same physical laws differently. A machine-learning model might occasionally exploit quirks in one simulator, converging on a local optimum that is infeasible in the real world. Such differences often become apparent when the model is evaluated across multiple simulation platforms. UCR employs a comprehensive evaluation process to assess a policy’s performance and confirm that it holds up across the sim2sim gap as numerical methods vary, which prepares the system for the complexities of the real world.
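A toy version of a sim2sim check can be sketched as follows. The same controller is rolled out on one set of dynamics integrated with two different numerical schemes, and the result is accepted only if it performs acceptably under both. The point-mass task, the PD controller standing in for a learned policy, the gains, and the tolerance are all illustrative assumptions, not UCR's evaluation suite.

```python
TARGET = 1.0  # desired position for the toy tracking task


def pd_policy(pos, vel, kp=20.0, kd=4.0):
    """Stand-in for a learned policy: returns a force command."""
    return kp * (TARGET - pos) - kd * vel


def rollout(policy, integrator, dt=0.01, steps=500, mass=1.0):
    """Simulate a 1D point mass and return the final tracking error."""
    pos, vel = 0.0, 0.0
    for _ in range(steps):
        acc = policy(pos, vel) / mass
        if integrator == "explicit_euler":
            # Position update uses the velocity from before this step.
            pos, vel = pos + vel * dt, vel + acc * dt
        elif integrator == "semi_implicit_euler":
            # Position update uses the freshly updated velocity.
            vel = vel + acc * dt
            pos = pos + vel * dt
        else:
            raise ValueError(f"unknown integrator: {integrator}")
    return abs(TARGET - pos)


def sim2sim_report(policy, integrators, tolerance=0.05):
    """Evaluate one policy under several integrators; pass only if all agree."""
    errors = {name: rollout(policy, name) for name in integrators}
    passed = all(err < tolerance for err in errors.values())
    return errors, passed


errors, passed = sim2sim_report(
    pd_policy, ["explicit_euler", "semi_implicit_euler"])
```

A policy that only works under one integrator would fail this kind of check, which is a hint that it is exploiting a numerical quirk rather than the underlying physics.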

Sim2real. Along with domain randomization, a popular practice in reinforcement learning for bridging the sim-to-real gap, UCR integrates a memory of past inputs and outputs into the policy to help robots adapt dynamically to varying conditions. This approach enables the robot to identify terrain geometry, the mass and inertia of payloads, and motor characteristics on its own, without being given explicit parameters. All of this is achieved with a single end-to-end network that simultaneously estimates state, perceives, and controls, functions that are traditionally developed as separate modules. This strategy simplifies the system and can generalize better across both diverse external environments and internal hardware variations.
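The two ingredients described above, per-episode domain randomization and a memory of past inputs and outputs, can be sketched in a few lines. Everything here is a hypothetical illustration: the parameter names and ranges (apart from the 27 kg payload ceiling mentioned earlier), the `HistoryBuffer` class, and the window length are assumptions, not UCR's configuration.

```python
import random
from collections import deque

# Hypothetical randomization ranges; only the 27 kg payload limit
# comes from the article, the rest are illustrative assumptions.
RANDOMIZATION_RANGES = {
    "payload_mass_kg": (0.0, 27.0),
    "ground_friction": (0.4, 1.2),
    "motor_strength_scale": (0.8, 1.2),
}


def sample_episode_params(rng):
    """Draw one set of physical parameters for a training episode."""
    return {name: rng.uniform(lo, hi)
            for name, (lo, hi) in RANDOMIZATION_RANGES.items()}


class HistoryBuffer:
    """Keeps the last `length` (observation, action) pairs as policy input."""

    def __init__(self, obs_dim, act_dim, length):
        frame = [0.0] * (obs_dim + act_dim)
        self.frames = deque([list(frame) for _ in range(length)],
                            maxlen=length)

    def push(self, obs, action):
        # The oldest frame is dropped automatically by the deque.
        self.frames.append(list(obs) + list(action))

    def as_policy_input(self):
        """Flatten the window, oldest frame first."""
        return [value for frame in self.frames for value in frame]


rng = random.Random(0)
params = sample_episode_params(rng)
buffer = HistoryBuffer(obs_dim=3, act_dim=2, length=4)
buffer.push(obs=[0.1, 0.0, -0.2], action=[0.5, -0.5])
policy_input = buffer.as_policy_input()
```

Because the policy never sees the sampled parameters directly, the only way it can perform well across episodes is to infer them from how past actions changed past observations, which is the implicit adaptation the paragraph describes.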

Real2real. How well a model generalizes across variations in hardware has real-world implications. These robots might be built with parts from various suppliers to handle real-world tasks. Ideally, the same software delivers consistent product performance across hardware variations and changes in working conditions. This typically requires exceptional uniformity in the performance and installation of actuators and sensors, along with consistent structural rigidity and mass properties. However, striving for that level of hardware consistency can incur astronomical costs in large-scale manufacturing and still leave the robot unusable when working conditions vary. At UCR, we tackle this problem through continuous and comprehensive evaluation of model performance across hardware variations, which identifies the operational boundaries of the AI models and lets us represent these real-world characteristics in the training pipeline.

AI model that adapts to different payloads and bodies

Hardware data is valuable yet sparse; once collected, it is fed back into the training pipeline through Isaac Lab and Isaac Sim. By incorporating real-world data into the design process, UCR enables reinforcement learning to fully realize the robot’s performance potential. This approach minimizes the gap between simulation and hardware performance, allowing for rapid iteration and robust learning. By continuously refining its models with hardware data, UCR keeps its systems resilient, accurate, and ready for the unpredictable disturbances of work in heavy industry.

State-of-the-Art Mobility

When developing Moby, UCR prioritizes application-essential performance over imitating human-like movement. That means optimizing for safety, energy efficiency, and agility rather than for human gait characteristics such as stepping frequency, stride length, or contact sequence. The result is a mobility policy that leverages each motor to achieve system-level optimal performance. Moby can walk in all directions, cross unstructured, debris-filled floors, fit through tight passageways, climb steep inclines, and even walk cross-slope, all while rejecting disturbances. Notably, some unplanned yet human-like motions, such as foot rolling and lateral torso sway, emerged as a natural consequence of optimizing for energy efficiency and safety rather than through deliberate imitation. This observation suggests that these metrics could serve as a generalizable basis for reward shaping in mobility tasks.
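As a rough illustration of reward shaping built on these metrics rather than on gait imitation, the sketch below combines a velocity-tracking term with an energy penalty and a simple safety penalty. The function name, the specific terms (mechanical power as the energy proxy, torso tilt as the safety proxy), and every weight are assumptions chosen for readability; they are not UCR's reward.

```python
import math


def mobility_reward(cmd_vel, base_vel, torques, joint_vels, torso_tilt_rad,
                    w_track=1.0, w_energy=0.002, w_safety=0.5,
                    tilt_limit_rad=0.35):
    """Hypothetical per-step reward with no human-gait imitation term."""
    # Task term: 1.0 when the commanded velocity is matched exactly.
    tracking = math.exp(-4.0 * (cmd_vel - base_vel) ** 2)
    # Energy proxy: total absolute mechanical power over all joints (W).
    mech_power = sum(abs(t * w) for t, w in zip(torques, joint_vels))
    # Safety proxy: penalize only the tilt beyond an allowed margin.
    tilt_excess = max(0.0, abs(torso_tilt_rad) - tilt_limit_rad)
    return w_track * tracking - w_energy * mech_power - w_safety * tilt_excess


reward = mobility_reward(
    cmd_vel=1.0, base_vel=0.9,
    torques=[12.0, -8.0], joint_vels=[1.5, 2.0],
    torso_tilt_rad=0.1,
)
```

Nothing in this reward mentions stride length, stepping frequency, or contact sequence, so any human-like motion a policy develops under it would be a by-product of the efficiency and safety terms.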

Moby walks on ramps and unstable terrain

Omnidirectional walking

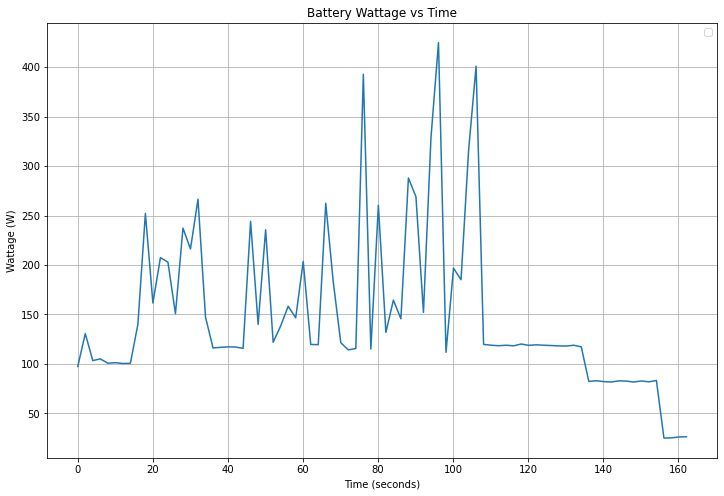

To operate in heavy industries, robots must be rugged, with heavy-duty structures that can endure a wide range of temperatures, dusty, steamy, or corrosive conditions, and varying payloads while operating for extended periods. Moby’s 72 kg body moves with an average power consumption of 200 W (similar to low-power appliances such as TVs), enabling it to handle five- to six-hour shifts with ease.
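The link between average power and shift length is simple arithmetic: energy equals power multiplied by time. The short sketch below works it out for the figures quoted above. It assumes the 200 W average covers the whole shift and ignores battery losses and reserve margins, and the function name is hypothetical.

```python
def required_energy_wh(avg_power_w, shift_hours):
    """Energy drawn over a shift, in watt-hours (power x time)."""
    return avg_power_w * shift_hours


for hours in (5, 6):
    energy = required_energy_wh(200, hours)
    print(f"{hours} h shift at 200 W -> {energy:.0f} Wh")
# prints:
# 5 h shift at 200 W -> 1000 Wh
# 6 h shift at 200 W -> 1200 Wh
```

Under those assumptions, a five- to six-hour shift corresponds to roughly 1.0 to 1.2 kWh of usable onboard energy.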

Moby's Power Consumption When Navigating Steep Ramp

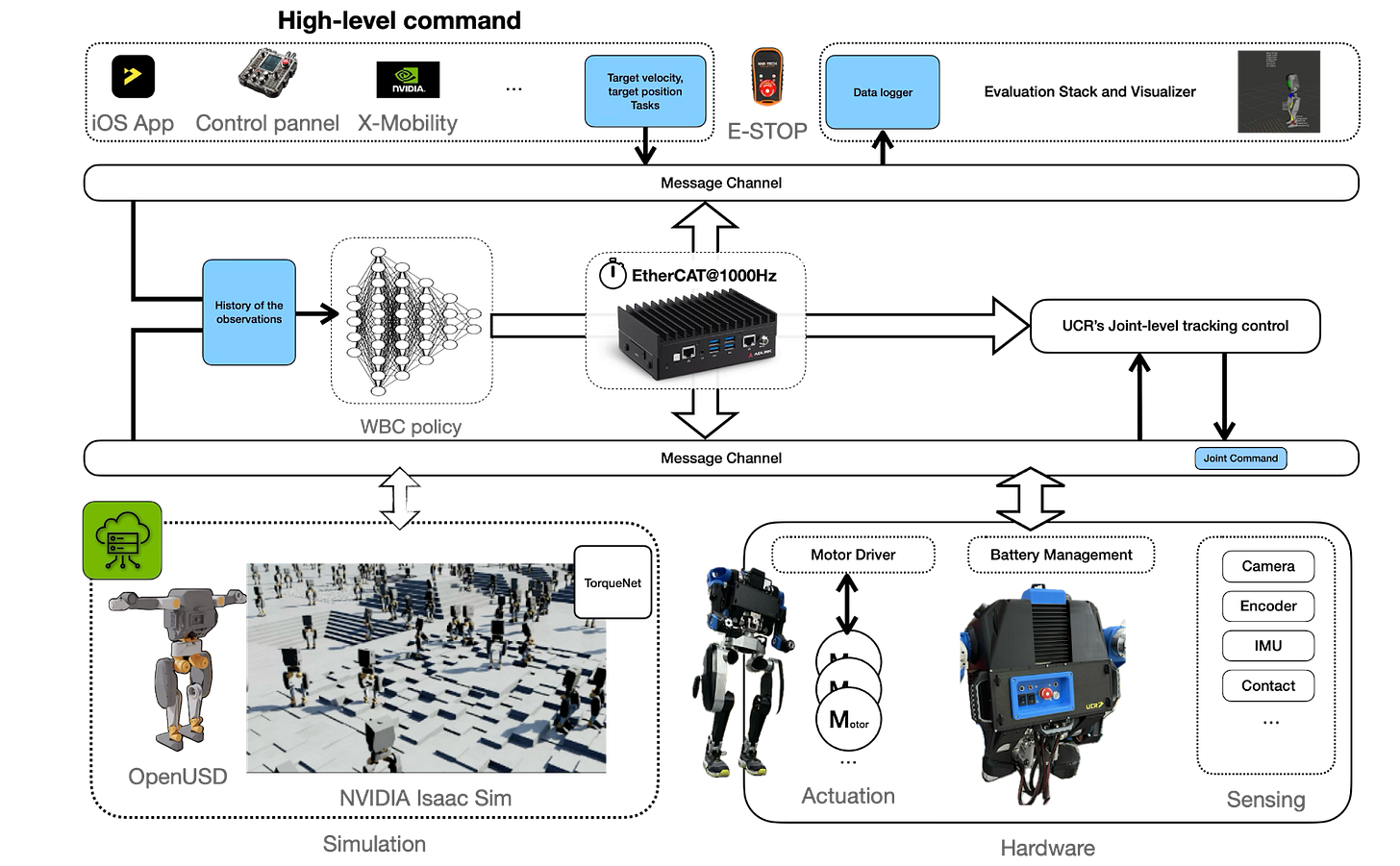

The ability to move freely and efficiently through diverse environments unlocks a world of possibilities. When given a high-level command, Moby intuitively coordinates its joints to maintain balance and precisely follow its intended path—whether navigating semi-structured indoor spaces with cluttered floors and narrow passages or traversing rugged outdoor terrain. UCR leverages NVIDIA X-Mobility, an end-to-end navigation model that uses world modeling, to provide continuous directional guidance until Moby reaches its destination. The modular yet cohesive integration of UCR’s state-of-the-art mobility with X-Mobility has shown real-world generalizability for industrial applications, including data acquisition, material handling, and assisting with high-risk, labor-intensive tasks while automating repetitive operations across critical industries.

A single NVIDIA Jetson computer powers the entire onboard software stack behind UCR’s multipurpose AI.

UCR’s entire onboard AI stack runs on a single NVIDIA Jetson Orin edge AI computer. This includes UCR’s in-house actuator and sensor drivers, which handle real-time communication; the system software that controls the robot’s behavior in different operating modes, such as booting up, safety halts, and resetting; and the perception and model inference pipeline, which enables the robot to see, understand, and act. Because NVIDIA CUDA is compatible across both platforms, software developed and evaluated on more powerful NVIDIA workstation GPUs can be deployed to the edge, which greatly accelerates development.

Real-world world-building

With a wide range of robotics companies embracing the challenge of reshaping our physical world, UCR is committed to improving safety and operational efficiency for the real-world applications that will profoundly transform our society, particularly those in critical industries like construction, mining, and energy, which form the backbone of our built world. With the help of NVIDIA’s ecosystem, UCR’s ambition to help build, shape, and expand the real world is within reach.

References

[1] Makoviychuk, Viktor, et al. "Isaac Gym: High performance GPU-based physics simulation for robot learning." arXiv preprint arXiv:2108.10470 (2021).

[2] Hwangbo, Jemin, et al. "Learning agile and dynamic motor skills for legged robots." Science Robotics 4.26 (2019): eaau5872.